Artificial Intelligences are not people. They don’t think the way people do, and it is misguided, possibly even dangerous, to anthropomorphise them. But it can be helpful to consider the similarities between humans and AIs from one perspective: their roles in society.

Regardless of the differences between AI models and human brains, AIs are increasingly interacting with and affecting people in the real world, including by taking on roles traditionally performed by humans. Unlike previous technologies that have influenced and reshaped society, AIs can reason, make decisions and act autonomously, which feels distinctly human. The more we adopt and come to depend on them in everyday life, particularly AIs with polished, intuitive interfaces, the easier it is to expect them to operate as people do. Yet at their core, they don’t.

AIs have a place in human society, but they aren’t of it, and they won’t understand our rules unless explicitly told. In turn, we need to figure out how to live with them. We need to define AI equivalents of the rules, values, processes and standards that have always applied to people. These structures have evolved over the long history of humanity, and society is complex, full of unwritten rules, such as ‘common sense’, that we still struggle to articulate. So we will need to do some clever thinking about this.

Addressing these challenges should start with getting the right people thinking about them. We usually turn to technologists and the technology sector on AI-related topics, but they cannot, and should not, be responsible for solving all of the challenges of AI. Last year, international thought leaders in AI called for a pause on technical AI research and development, a pause that might be justified if it gave our governments and wider society time to adapt. However, unless those same communities participate far more broadly in AI, any pause simply delays the inevitable and can give adversaries a lead in shaping the emerging technology landscape away from our interests.

So, let’s think more holistically about how to grow AIs into productive contributors to society. One way to do this is to consider some illustrative similarities with raising children.

When parents first decide to have children, a significant amount of time, effort and money can go into making a baby, with each child taking a fixed time (around nine months) to physically create. But the far greater investment comes after the birth as the family raises the child into an adult over the next 20 or more years.

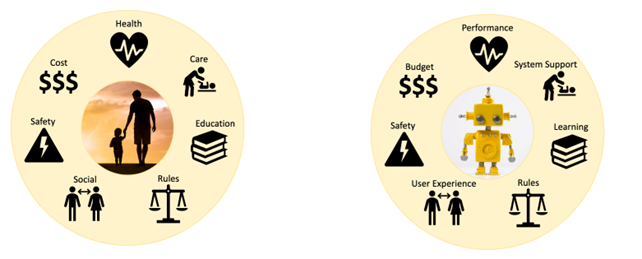

Figure 1: Some considerations for raising a human vs raising an AI.

Raising kids is not easy—parents have an enormous range of decisions to make about a child’s health, education, social skills, safety, and the appropriate rules and boundaries at each stage as it develops into a well-adjusted and productive member of society. Over time, society has created institutions, laws, frameworks and guidance to support parents and help them make the best decisions they can for their families.

These include institutions such as paediatric hospitals, schools and childcare facilities. We have child welfare and protection laws. Schools follow K-12 curricula and use books that are ‘age appropriate’. We have expected standards for behavioural, social and physical development as children grow. Parents are given dietary recommendations (the ‘food pyramid’) and vaccination schedules for their child’s health and wellbeing. Entire industries make toys, play equipment and everyday objects that are safe for children to use and interact with.

Yet in the case of AI, we are collectively preoccupied with being pregnant.

Most of the discussion is about making the technology. How do we create it? Is the tech fancy enough? Is the algorithm used to create the model the right choice? Certainly, a large effort is needed to create the first version of a new model. But once that initial work is done, software can be cloned and deployed extremely quickly, which is not true of a child. This means creation is an important but relatively small part of an average AI’s lifespan, while the rearing phase is long and influential yet comparatively overlooked in planning.

A good way to start thinking about ‘raising’ AIs is by recognising that the institutions, frameworks and services that society has for young, growing humans will have equivalents for young, growing AIs, only with different names.

So, instead of talking about the health of an AI, we’re really talking about whether its performance is fit for purpose. This can include whether we have the right infrastructure and clean data for the problem. Is the data current and appropriate? Do we have enough computing power, responsiveness and resilience built into the system for it to make decisions in the timeframes needed: milliseconds for real-time operations such as drone piloting, for example, as opposed to hours, days or weeks for research results? Are we updating the model regularly enough, or have we let it drift over time? We can also inoculate AI systems against some kinds of damage or manipulation, much as we vaccinate against diseases, by training machine learning models on small amounts of carefully crafted ‘bad’ data, called adversarial examples, to build up their resistance.
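The inoculation idea above can be illustrated with a minimal sketch of adversarial training on a toy logistic-regression classifier in plain Python. It assumes the Fast Gradient Sign Method (FGSM) as the way of crafting the ‘bad’ data; that choice, the function names (`fgsm`, `train`, `accuracy`, `make_data`), the synthetic two-cluster dataset and the hyperparameters are all hypothetical and illustrative, not a description of any particular production system.

```python
import math
import random

# Hypothetical sketch: toy logistic regression hardened with FGSM adversarial
# training. Names, data and hyperparameters are illustrative assumptions.

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict(w, b, x):
    """Probability that input x belongs to class 1."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method: shift each feature by eps in the direction
    that most increases the log-loss for the true label y."""
    p = predict(w, b, x)
    # For logistic regression, d(log-loss)/dx_i = (p - y) * w_i.
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]

def train(data, epochs=50, lr=0.1, eps=0.0):
    """Plain SGD. With eps > 0, each step also trains on an FGSM-perturbed
    copy of the example: the 'inoculation' step."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            batch = [(x, y)]
            if eps > 0:
                batch.append((fgsm(w, b, x, y, eps), y))
            for xb, yb in batch:
                err = predict(w, b, xb) - yb
                w = [wi - lr * err * xi for wi, xi in zip(w, xb)]
                b -= lr * err
    return w, b

def accuracy(w, b, data, eps=0.0):
    """Accuracy on clean inputs (eps=0) or on FGSM-attacked inputs (eps>0)."""
    hits = 0
    for x, y in data:
        xa = fgsm(w, b, x, y, eps) if eps > 0 else x
        hits += (predict(w, b, xa) >= 0.5) == (y == 1)
    return hits / len(data)

def make_data(n=100, seed=0):
    """Two synthetic Gaussian clusters, labelled 1 and 0."""
    rng = random.Random(seed)
    data = []
    for i in range(n):
        y = i % 2
        c = 2.0 if y == 1 else -2.0
        data.append(([rng.gauss(c, 0.5), rng.gauss(c, 0.5)], y))
    return data

if __name__ == "__main__":
    data = make_data()
    plain = train(data)              # no inoculation
    hardened = train(data, eps=0.3)  # adversarial training
    for name, (w, b) in (("plain", plain), ("hardened", hardened)):
        print(name, "clean:", accuracy(w, b, data),
              "attacked:", accuracy(w, b, data, eps=0.3))
```

On data this cleanly separated, both models may score similarly; the point is the mechanics rather than the numbers. Adversarial training also tends to protect mainly against the kinds of perturbation it was trained on, which is one reason it complements, rather than replaces, the broader security testing discussed later.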

Rather than care and feeding, as with humans, we talk about system support and maintenance over the AI’s lifetime. This includes how we check in to make sure it’s still doing well and how we make adjustments for different stages of its development. We need to ensure it has enough power and cooling for the computations we want it to make, that it has sufficient memory and processing capacity, and that we are managing and securing its data appropriately. We need to know what the software patching lifecycle will look like and how long we expect the system to remain in service, given the rapid pace of technological change. This is closely tied to budget, because upfront build costs can be eclipsed by the cost of supporting a system over the long term. Keeping runtime costs affordable and reducing environmental impact might mean limiting the scope of some AIs to ensure they deliver value for their users.

In place of education, we consider machine learning and the need to make sure that what an AI learns is accurate and ‘age appropriate’ (depending on whether we are giving it simple or complex tasks), and that it draws on expert sources of information rather than, for example, random Internet blogs. Much like young children, early chatbots such as the first version of ChatGPT (based on GPT-3.5) were notorious for making things up, or ‘hallucinating’, based on what they seemed to think the user wanted to hear. Systems, like people, need to learn from their experiences and mistakes to develop more nuanced, informed views.

Rules in AIs are the boundaries we set for what we want them to do and how we want them to work: when we allow autonomy, when we won’t, and how we want them to be accountable, much as for people. Many rules around AI emphasise explainability and transparency, worthy goals for understanding how a system works but not always as helpful as they sound. Often they refer to being able to, for instance, recreate and observe the state of a model at a specific point in time, comparable to mapping how a thought occurs in the brain by tracing its electrical pathways. But neither of these does much to explain the reason for, or the fairness of, a particular decision. More helpfully, a machine learning model can be run thousands of times and assessed by its results across a range of scenarios to determine whether it is ‘explainably fair’, and then tweaked until we’re happier with the answers. This form of correction, or rehabilitation, is ultimately more useful for serving people’s real-world needs than a description of a snapshot of memory.
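As a rough illustration of judging a model by its results rather than its internals, the sketch below runs a model over thousands of synthetic scenarios and compares outcomes between two groups. Everything here is hypothetical: the loan-style features, the group labels, the two toy models and the choice of a simple approval-rate gap (a paired, counterfactual variant of demographic parity) as the fairness measure. Real assessments would use domain-specific scenarios and metrics chosen with the people affected.

```python
import random
from typing import Any, Callable, Dict, List

# Hypothetical sketch: black-box, scenario-based fairness testing.
Applicant = Dict[str, Any]
Model = Callable[[Applicant], bool]

def make_scenarios(n: int, seed: int = 0) -> List[Applicant]:
    """Synthetic applicants (illustrative features), no group attribute yet."""
    rng = random.Random(seed)
    return [{"income": rng.uniform(20, 120), "debt": rng.uniform(0, 50)}
            for _ in range(n)]

def approval_rates(model: Model, n: int = 10_000, seed: int = 0) -> Dict[str, float]:
    """Run the model on the same n scenarios once per group, so any
    difference in outcomes can only come from the group attribute."""
    base = make_scenarios(n, seed)
    rates = {}
    for group in ("A", "B"):
        outcomes = [model({**s, "group": group}) for s in base]
        rates[group] = sum(outcomes) / n
    return rates

def parity_gap(model: Model, **kwargs) -> float:
    """Absolute difference in approval rates between the two groups."""
    rates = approval_rates(model, **kwargs)
    return abs(rates["A"] - rates["B"])

# Two hypothetical toy models: the first applies a stricter threshold to
# group B; the second is the "tweaked" version with one threshold for all.
def biased_model(a: Applicant) -> bool:
    threshold = 50 if a["group"] == "B" else 40
    return a["income"] - a["debt"] > threshold

def adjusted_model(a: Applicant) -> bool:
    return a["income"] - a["debt"] > 45

if __name__ == "__main__":
    for m in (biased_model, adjusted_model):
        print(m.__name__, approval_rates(m), round(parity_gap(m), 3))
```

Because both groups are scored on identical scenarios, the adjusted model’s gap is exactly zero here. In practice, group membership often correlates with other features, so passing a single test like this would not by itself establish fairness, and choosing which fairness measure to apply is itself a policy decision rather than a purely technical one.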

User experience (UX) and user interface design are the equivalent of children’s social skills: they enable an AI to communicate and explain the information and assessments it has produced in a way that others can understand. Humans, at the best of times, aren’t particularly comfortable with statistics and the language of mathematics, so it’s critically important to design intuitive ways for people to interpret an AI’s output, in particular taking into account what the AI is, and isn’t, capable of. This is easier with young humans because we each have an innate understanding of children, having once been children ourselves. When we interact with them, we know what to expect. This is less true of AIs: we don’t trust a machine in the way we trust humans because we may not understand its reasoning, its biases or exactly what it is attempting to say. Nor do we necessarily communicate in the way an AI needs. Engaging with ChatGPT has taught us to change how we ask questions and to be very specific in defining the problem and its boundaries. This is a challenge for both developers and society, and both sides need to learn and improve.

Safety can be thought of in several ways in the context of AI. It can mean the safety of users, whether that’s their physical or financial safety from bad decisions by the AI or their susceptibility to being negatively influenced by it. But it also means protecting the AI itself against damage or adverse effects. The concept of digital safety in an online world is understood (if not practised) by the public, and much of the media discussion about AI safety is devoted to the risk that an AI ‘goes rogue’. Care must be taken to mitigate unintended consequences when we rely on AIs, particularly for tasks they’re not well designed for. But far too little time is devoted to AI security: considering whether an AI can be actively manipulated or disrupted. Active exploitation of an AI by a malicious adversary seeking to undermine social structures, values and safeguards could have an enormous impact, particularly given the potential reach of large-scale AIs or AIs embedded in critical systems. We will need to use adversarial machine learning, penetration testing, red-teaming and holistic security assessments (including physical, personnel, data and cyber security) to identify vulnerabilities, monitor for bad actors and make our AIs as difficult as possible to exploit. AI security will be a critical growth area in the years to come and a focus for defence and national security communities around the world.

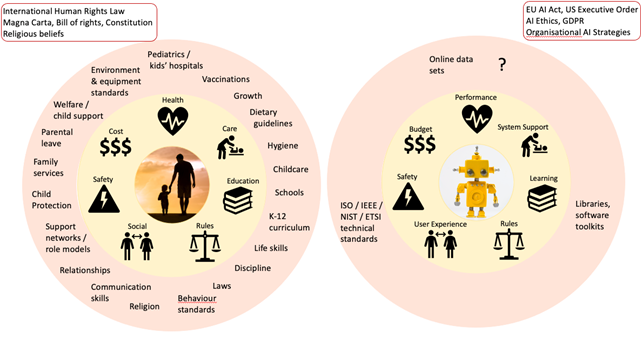

Figure 2: Laws, frameworks and guidelines for raising a human vs raising an AI.

Much of the time when we discuss AI, we either talk about ethics and safety at a high level, through principles such as transparency, accountability and fairness, or we talk about algorithms, deep in the weeds of the technology. All these aspects are important to understand. But what’s largely missing from the AI discussion is the space in between: the guidance and frameworks that AI developers and customers need.

In the same way we would not expect (or want) technologists to make decisions about child welfare laws, we should not leave far-reaching decisions about the use of AI to today’s ‘AI community’, which is made up almost exclusively of scientists and engineers. We need teachers, lawyers, philosophers, ethicists, sociologists and psychologists to help set bounds and standards that shape what we want AI to do, what we keep in human hands and what we want a future AI-enabled society to look like.

We need entrepreneurs and experts in the problem domains, along with project managers and finance and security experts who know how to deliver what is needed at a price and risk threshold that we can accept. And we need designers, UX specialists and communicators to shape how an AI reports its results, how users interpret those results and how we can do this better, so that we head off misunderstandings and misinformation.

There are many lessons for technology from the humanities. Bringing people together from across all these disciplines, not just the technical ones, must form a part of any strategy for AI. And this must come with commensurate investment. Scholarships and incentives in academia for AI research should be extended well beyond the STEM disciplines. They should help develop and support new multidisciplinary collaborative groups to look at AI challenges, such as the Australian Society for Computers & Law.

Just as it takes a village to raise a child, governing AI takes a multidisciplinary village, so that we can raise AIs that are productive, valued contributors to society.